NVIDIA Jetson Object Detection with Jetson Inference and TensorFlow

What is Jetson Inference?

Jetson Inference is an instructional guide to inference and a real-time DNN vision library for NVIDIA Jetson Nano/TX1/TX2/Xavier NX/AGX Xavier/AGX Orin.

The library uses NVIDIA TensorRT to deploy neural networks efficiently onto the embedded Jetson platform, improving performance and power efficiency through graph optimizations, kernel fusion, and FP16/INT8 precision.

Vision primitives, such as imageNet for image recognition, detectNet for object detection, segNet for semantic segmentation, and poseNet for pose estimation, inherit from the shared tensorNet object. Examples are provided for streaming from a live camera feed and for processing images. See the API Reference section for detailed reference documentation of the C++ and Python libraries.

Locating Objects with DetectNet

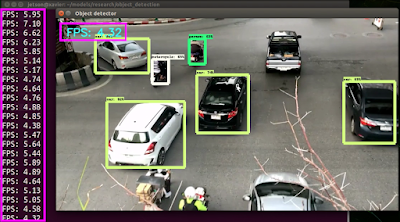

The previous recognition examples output class probabilities representing the entire input image. Next we're going to focus on object detection, and finding where in the frame various objects are located by extracting their bounding boxes. Unlike image classification, object detection networks are capable of detecting many different objects per frame.

The detectNet object accepts an image as input, and outputs a list of coordinates of the detected bounding boxes along with their classes and confidence values. detectNet is available to use from Python and C++. See below for various pre-trained detection models available for download. The default model used is a 91-class SSD-Mobilenet-v2 model trained on the MS COCO dataset, which achieves realtime inferencing performance on Jetson with TensorRT.

As examples of using the detectNet class, we provide sample programs for C++ and Python:

- detectnet.cpp (C++)

- detectnet.py (Python)

These samples are able to detect objects in images, videos, and camera feeds. For more info about the various types of input/output streams supported, see the Camera Streaming and Multimedia page.
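As a rough illustration of the flow described above (image in, list of bounding boxes with classes and confidences out), here is a minimal Python sketch. It assumes the `jetson_inference` and `jetson_utils` Python modules from a recent jetson-inference build (older releases expose them as `jetson.inference` and `jetson.utils`), and the `/dev/video0` camera URI is only a placeholder. The `describe` and `run` helpers are hypothetical names for this example and are not part of the library.

```python
def describe(detection, class_name):
    """Format one detection (class, confidence, box corners) as a readable line."""
    return (f"{class_name} ({detection.Confidence:.0%}) at "
            f"[{detection.Left:.0f}, {detection.Top:.0f}, "
            f"{detection.Right:.0f}, {detection.Bottom:.0f}]")


def run(source_uri="/dev/video0", network="ssd-mobilenet-v2", threshold=0.5):
    """Print every detection from a camera or video stream (needs a Jetson)."""
    # Imported lazily so that describe() stays usable without the Jetson libraries.
    from jetson_inference import detectNet
    from jetson_utils import videoSource

    net = detectNet(network, threshold=threshold)  # loads the pre-trained model
    source = videoSource(source_uri)               # placeholder URI, adjust as needed

    while source.IsStreaming():
        img = source.Capture()
        if img is None:  # capture timed out, try again
            continue
        for det in net.Detect(img):
            print(describe(det, net.GetClassDesc(det.ClassID)))
```

On a Jetson with the library installed, calling `run()` should print one line per detected object for each captured frame. The bundled detectnet.py sample remains the reference for how the project itself handles streaming and overlays.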

Pre-trained Detection Models Available

Below is a table of the pre-trained object detection networks available for download, and the associated --network argument to detectnet used for loading the pre-trained models:

| Model | CLI argument | NetworkType enum | Object classes |
|---|---|---|---|
| SSD-Mobilenet-v1 | ssd-mobilenet-v1 | SSD_MOBILENET_V1 | 91 (COCO classes) |
| SSD-Mobilenet-v2 | ssd-mobilenet-v2 | SSD_MOBILENET_V2 | 91 (COCO classes) |
| SSD-Inception-v2 | ssd-inception-v2 | SSD_INCEPTION_V2 | 91 (COCO classes) |
| DetectNet-COCO-Dog | coco-dog | COCO_DOG | dogs |
| DetectNet-COCO-Bottle | coco-bottle | COCO_BOTTLE | bottles |
| DetectNet-COCO-Chair | coco-chair | COCO_CHAIR | chairs |
| DetectNet-COCO-Airplane | coco-airplane | COCO_AIRPLANE | airplanes |
| ped-100 | pednet | PEDNET | pedestrians |
| multiped-500 | multiped | PEDNET_MULTI | pedestrians, luggage |
| facenet-120 | facenet | FACENET | faces |

Note: to download additional networks, run the Model Downloader tool:

$ cd jetson-inference/tools

$ ./download-models.sh
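To make the table's CLI argument column concrete, the sketch below builds a detectnet.py command line for a chosen network and rejects names that are not in the table. The `detectnet_command` helper and the file names in the usage note are hypothetical. The input-then-output positional order follows the sample's usual invocation, so treat it as an assumption and check it against your installed version.

```python
# CLI arguments copied from the table of pre-trained detection models.
DETECTION_NETWORKS = {
    "ssd-mobilenet-v1", "ssd-mobilenet-v2", "ssd-inception-v2",
    "coco-dog", "coco-bottle", "coco-chair", "coco-airplane",
    "pednet", "multiped", "facenet",
}


def detectnet_command(network, input_uri, output_uri, script="detectnet.py"):
    """Return an argument list suitable for subprocess.run()."""
    if network not in DETECTION_NETWORKS:
        raise ValueError(f"unknown detection network: {network!r}")
    return [script, f"--network={network}", input_uri, output_uri]
```

For example, `detectnet_command("pednet", "in.jpg", "out.jpg")` yields the list `["detectnet.py", "--network=pednet", "in.jpg", "out.jpg"]`, which can be passed to `subprocess.run` on the device.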

This demo uses only detectNet.

NVIDIA Jetson Nano with Jetson Inference ( DetectNet )

Inference Performance

NVIDIA Jetson Xavier NX with Jetson Inference ( DetectNet )

Inference Performance

TensorFlow Object Detection API

Creating accurate machine learning models capable of localizing and identifying multiple objects in a single image remains a core challenge in computer vision. The TensorFlow Object Detection API is an open source framework built on top of TensorFlow that makes it easy to construct, train and deploy object detection models. At Google we’ve certainly found this codebase to be useful for our computer vision needs, and we hope that you will as well.
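To give a feel for the deployment side, here is a hedged sketch of running a TensorFlow 1 style frozen detection graph and converting its output to pixel coordinates. It assumes the tensor names used by graphs exported from the Object Detection API (`image_tensor`, `detection_boxes`, `detection_scores`, `detection_classes`) and normalized boxes in `[ymin, xmin, ymax, xmax]` order. The helper names and any `.pb` path you pass in are hypothetical, and the code is not taken from this demo.

```python
def load_frozen_graph(pb_path):
    """Load a frozen inference graph (.pb) into a new tf.Graph."""
    import tensorflow as tf  # imported lazily; only needed on the device

    graph = tf.Graph()
    with graph.as_default():
        graph_def = tf.compat.v1.GraphDef()
        with tf.io.gfile.GFile(pb_path, "rb") as f:
            graph_def.ParseFromString(f.read())
        tf.import_graph_def(graph_def, name="")
    return graph


def detect(graph, image_batch):
    """Run one uint8 batch of shape [1, H, W, 3]; return boxes, scores, classes."""
    import tensorflow as tf

    with tf.compat.v1.Session(graph=graph) as sess:
        boxes, scores, classes = sess.run(
            ["detection_boxes:0", "detection_scores:0", "detection_classes:0"],
            feed_dict={"image_tensor:0": image_batch},
        )
    return boxes[0], scores[0], classes[0]


def to_pixel_boxes(boxes, scores, classes, width, height, min_score=0.5):
    """Keep detections scoring at least min_score and scale boxes to pixels.

    Returns tuples of (class_id, score, (left, top, right, bottom)).
    """
    results = []
    for (ymin, xmin, ymax, xmax), score, cls in zip(boxes, scores, classes):
        if score < min_score:
            continue
        box = (round(xmin * width), round(ymin * height),
               round(xmax * width), round(ymax * height))
        results.append((int(cls), float(score), box))
    return results
```

Opening a session per call keeps the sketch short. For a live video loop you would normally create the session once and reuse it across frames.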

TensorFlow 1 Detection Model Zoo

We provide a collection of detection models pre-trained on the COCO dataset, the Kitti dataset, the Open Images dataset, the AVA v2.1 dataset, the iNaturalist Species Detection Dataset and the Snapshot Serengeti Dataset. These models can be useful for out-of-the-box inference if you are interested in categories already in those datasets. They are also useful for initializing your models when training on novel datasets.

NVIDIA Jetson Nano with TensorFlow Object Detection

Inference Performance

NVIDIA Jetson Xavier NX with TensorFlow Object Detection

Inference Performance

Compare NVIDIA Jetson Nano vs. NVIDIA Jetson Xavier NX

Reference