NVIDIA Jetson YOLO Object Detection

These demos show how to convert pre-trained YOLOv3 and YOLOv4 models to TensorRT engines through ONNX. The code for these two demos has gone through some significant changes: I have recently updated the implementation with a "yolo_layer" plugin to speed up inference of the YOLOv3/YOLOv4 models.
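For readers new to YOLO: each detection head outputs raw values per grid cell and anchor (t_x, t_y, t_w, t_h, objectness, class scores). These must be decoded into boxes before use, and that decoding is the kind of work a custom layer such as the "yolo_layer" plugin can move onto the GPU. The sketch below decodes a single prediction in plain Python using the equations from the YOLOv3 paper: b_x = σ(t_x) + c_x, b_y = σ(t_y) + c_y, b_w = p_w·e^(t_w), b_h = p_h·e^(t_h). It is an illustration, not the plugin's code. The helper name `decode_cell` and the example anchor (116 × 90 pixels on the 13 × 13 grid of a 416 × 416 input) are assumptions, and YOLOv4 configs may additionally scale the center offsets, which this sketch omits.

```python
import math
from typing import Tuple

Box = Tuple[float, float, float, float]


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def decode_cell(t: Tuple[float, float, float, float, float],
                cell_x: int, cell_y: int,
                anchor_w: float, anchor_h: float,
                grid_size: int, input_size: int) -> Tuple[Box, float]:
    """Hypothetical helper: decode one raw prediction into (x1, y1, x2, y2) pixels.

    t = (tx, ty, tw, th, t_obj). Follows the YOLOv3 equations; the anchor
    size (pw, ph) is given in input-image pixels.
    """
    tx, ty, tw, th, t_obj = t
    stride = input_size / grid_size           # pixels per grid cell
    cx = (sigmoid(tx) + cell_x) * stride      # box center, pixels
    cy = (sigmoid(ty) + cell_y) * stride
    w = anchor_w * math.exp(tw)               # box size, pixels
    h = anchor_h * math.exp(th)
    box = (cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2)
    return box, sigmoid(t_obj)                # objectness as a probability


if __name__ == "__main__":
    # All-zero raw outputs in cell (6, 6) of a 13x13 grid, 416x416 input.
    box, obj = decode_cell((0.0, 0.0, 0.0, 0.0, 0.0), 6, 6, 116.0, 90.0, 13, 416)
    print(box, obj)  # (150.0, 163.0, 266.0, 253.0) 0.5
```

With all raw outputs at zero, the center lands in the middle of its cell and the box takes exactly the anchor's size, which is a quick sanity check on any decoder.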

What is a YOLO object detector?

When it comes to deep learning-based object detection, there are three primary object detectors you’ll encounter:

- R-CNN and its variants, including the original R-CNN, Fast R-CNN, and Faster R-CNN

- Single Shot Detectors (SSDs)

- YOLO

You Only Look Once: Unified, Real-Time Object Detection

https://arxiv.org/pdf/1506.02640v3.pdf

YOLOv4

With the original authors' work on YOLO coming to a standstill, YOLOv4 was released by Alexey Bochkovskiy, Chien-Yao Wang, and Hong-Yuan Mark Liao. The paper is titled YOLOv4: Optimal Speed and Accuracy of Object Detection.

Authors: Alexey Bochkovskiy, Chien-Yao Wang, and Hong-Yuan Mark Liao

Released: 23 April 2020

YOLOv3

YOLOv3 improved on YOLOv2, and both of the original authors, Joseph Redmon and Ali Farhadi, contributed to it.

Together they published YOLOv3: An Incremental Improvement.

The original YOLO papers are linked in the Reference section at the end of this post.

Authors: Joseph Redmon and Ali Farhadi

Released: 8 April 2018

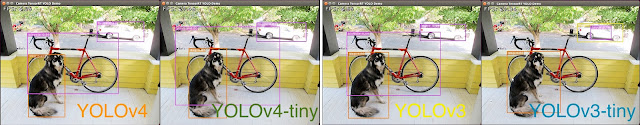

In this blog post we’ll be using YOLOv3 and YOLOv4, specifically models trained on the COCO dataset.

The COCO dataset covers 80 object classes.
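To make the 80 classes concrete: in YOLOv3 and YOLOv4, each anchor predicts 4 box values, 1 objectness score and one score per class, so with COCO every anchor carries 5 + 80 = 85 values. The sketch below computes the output head shapes for a 416 × 416 input, assuming the standard configurations: three anchors per scale at strides 32, 16 and 8 for the full models, and two scales (strides 32 and 16) for the tiny variants. `yolo_head_shapes` is a hypothetical helper for illustration, not part of the TensorRT demos code.

```python
from typing import List, Sequence, Tuple


def yolo_head_shapes(input_size: int = 416,
                     num_classes: int = 80,
                     strides: Sequence[int] = (32, 16, 8),
                     anchors_per_scale: int = 3) -> Tuple[List[Tuple[int, int, int]], int]:
    """Hypothetical helper: return (grid, grid, channels) per head and the total
    number of candidate boxes before thresholding and NMS."""
    # Per anchor: tx, ty, tw, th, objectness, plus one score per class.
    channels = anchors_per_scale * (5 + num_classes)
    shapes = [(input_size // s, input_size // s, channels) for s in strides]
    num_boxes = sum(g * g * anchors_per_scale for g, _, _ in shapes)
    return shapes, num_boxes


if __name__ == "__main__":
    print(yolo_head_shapes())                  # full YOLOv3/YOLOv4-416 heads
    print(yolo_head_shapes(strides=(32, 16)))  # tiny-416 heads
```

For the full 416 models this gives heads of 13 × 13, 26 × 26 and 52 × 52 with 255 channels each, or 10,647 candidate boxes per image; the tiny variants produce 2,535, which is one reason their post-processing is lighter.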

YOLOv3-416

Image: 4.46 FPS

Video: 4.35 FPS

YOLOv3-tiny-416

Image: 22.30 FPS

Video: 20.58 FPS

YOLOv4-416

Image: 4.46 FPS

Video: 4.35 FPS

Image: 24.09 FPS

Video: 22.33 FPS

YOLOv4-tiny-416

For NVIDIA Jetson Nano

Image: 22.41 FPS

Video: 20.47 FPS

Image: 47.93 FPS

Video: 43.13 FPS
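The figures above are throughput in frames per second. One minimal way to measure such a number is to time a loop over frames after a few warm-up calls and divide the frame count by the elapsed seconds. The sketch below assumes a hypothetical `infer` callable that processes one frame end to end (pre-processing, inference, post-processing); it is an illustration only and not necessarily how the figures above were obtained.

```python
import time
from typing import Callable, Sequence, Tuple


def fps_to_latency_ms(fps: float) -> float:
    """Convert throughput in FPS to an average per-frame time in milliseconds."""
    return 1000.0 / fps


def measure_fps(infer: Callable[[object], object],
                frames: Sequence[object],
                warmup: int = 5) -> Tuple[float, float]:
    """Return (frames per second, mean per-frame latency in ms).

    `infer` is a hypothetical stand-in for one full detector call.
    """
    if len(frames) <= warmup:
        raise ValueError("need more frames than warm-up iterations")
    # Warm-up calls are excluded because first runs often pay one-off setup costs.
    for frame in frames[:warmup]:
        infer(frame)
    timed = frames[warmup:]
    start = time.perf_counter()
    for frame in timed:
        infer(frame)
    elapsed = time.perf_counter() - start
    fps = len(timed) / elapsed
    return fps, fps_to_latency_ms(fps)
```

Reading the numbers as latency can be more intuitive: 4.46 FPS corresponds to roughly 224 ms per frame, whereas 47.93 FPS is roughly 21 ms per frame.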

Compare Performance

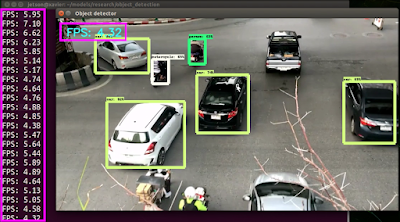

Running on an NVIDIA GTX 1070 GPU

YOLOv4-416 (TensorRT FP16)

YOLOv4-tiny-416 (TensorRT FP16)

Compare Performance

Object detection with YOLOv4-tiny-416 on an NVIDIA GTX 1070 GPU

Reference

TensorRT demos

https://github.com/jkjung-avt/tensorrt_demos

You Only Look Once: Unified, Real-Time Object Detection

https://arxiv.org/pdf/1506.02640v3.pdf

YOLOv3: An Incremental Improvement

https://arxiv.org/pdf/1804.02767.pdf

YOLOv4: Optimal Speed and Accuracy of Object Detection

https://arxiv.org/pdf/2004.10934.pdf

Darknet YOLO (AlexeyAB)

https://github.com/AlexeyAB/darknet

YOLO object detection with OpenCV (PyImageSearch)

https://pyimagesearch.com/2018/11/12/yolo-object-detection-with-opencv/

Website: https://softpower.tech